Introduction

In today’s AI landscape, text and image generation have flourished, and the underlying technologies keep advancing. AI video generation, by comparison, has yet to see a comparable breakthrough. Video generation comes in two main types, text-to-video and image-to-video, in which generative AI models produce a video from a text prompt or from an image, respectively.

As text-to-image and image-to-image generation have matured, text/image-to-video has attracted steadily more development and attention. The technical frameworks behind text-to-image and text/image-to-video are quite similar and fall into three main families: GANs (Generative Adversarial Networks), autoregressive models, and diffusion models.

Below are some commercial video generators as well as open-source video generation projects:

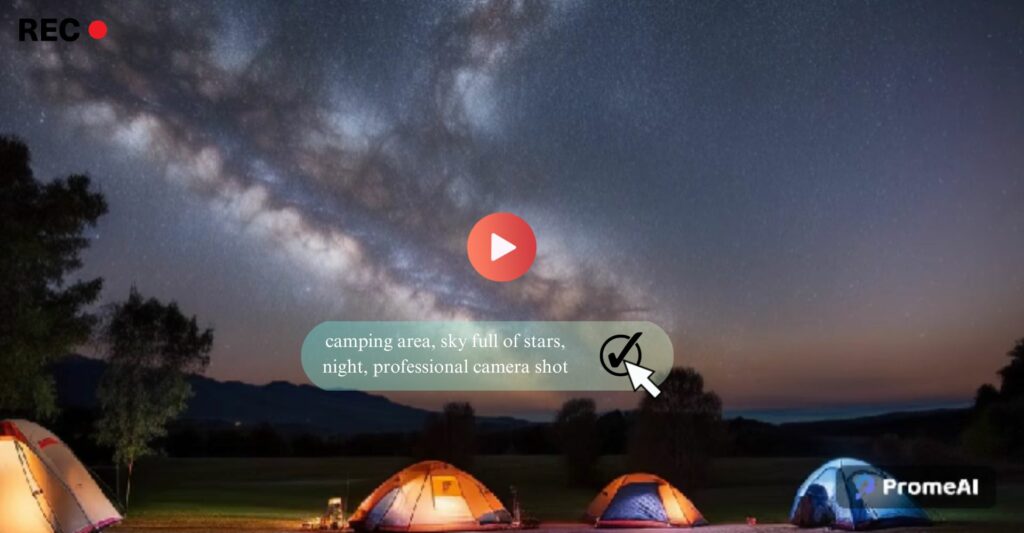

1. PromeAI Video Generator

PromeAI’s video generation is powered by a proprietary algorithm developed by LibAI Lab. It can generate high-quality videos that are both realistic and coherent, and it is very simple to use: a one-sentence description is all it takes to generate a video.

Beyond text-to-video generation, PromeAI also offers image tools such as text-to-image generation, image-to-image generation, background replacement, outpainting, inpainting, and other popular features.

Drawing on years of research in AI image and video, LibAI Lab has built a base of more than five million monthly users, making it one of the most promising artificial intelligence companies.

2. Runway

Runway is a leading provider of image and video editing software based on generative AI and one of the video generators currently available commercially to consumers. The company was founded in 2018 by Cristóbal Valenzuela, Alejandro Matamala, and Anastasis Germanidis. Initially focused on image transformation, Runway has since expanded into AI-powered video editing and generation.

Runway’s technology is widely used in film, television, and advertising. The visual effects team behind the Oscar-winning film ‘Everything Everywhere All At Once’ used Runway’s tools to help create certain scenes. In June, Runway released Gen-2, its first commercially available text-to-video model, which can generate videos from text and images. Users can try it with free trial credits or sign up for a paid subscription.

3. Pika Labs

Pika 1.0 was released just a few days ago and quickly drew widespread attention. Pika, the company behind it, was founded in April this year and, after more than six months of testing in its Discord community, decided it was time for a major upgrade. Pika 1.0 offers more features than the previous version.

Beyond generating videos from text or images, it also supports editing selected parts of a video. Although the company is only six months old, Pika has already amassed more than 520,000 users. It also recently raised $55 million in funding, with notable individual investors including Adam D’Angelo, founder of Quora; Aravind Srinivas, CEO of Perplexity; and Nat Friedman, former CEO of GitHub.

4. Invideo AI – AI Video Generator

Invideo AI is an AI video generator that lets users create professional-looking videos with little effort. By using AI to streamline video production, it helps individuals and businesses turn their ideas into engaging visual content. With a user-friendly interface and a large library of pre-designed templates, it caters to beginners and experienced video creators alike. Users can easily customize videos by adding text, images, and music to enhance their visual appeal, while Invideo’s AI automates parts of the editing process to save time and effort. Whether for social media, marketing, or storytelling, Invideo AI makes video creation accessible and efficient.

5. Moonvalley Video Generator

Moonvalley, a generative AI company incubated by Y Combinator, has released a powerful text-to-video generator. From text prompts alone, it can generate high-definition, cinematic 16:9 videos. Moonvalley is currently in beta, free to use, and accessed through Discord. It offers five video styles, including anime, fantasy, realism, and 3D animation, and users can choose short (about 1 second), medium (about 3 seconds), or long (about 5 seconds) clips.

6. LTX Studio

LTX Studio is Lightricks’ holistic AI video platform, designed to tackle the challenges of creating longer-form AI-generated films and videos by bringing everything they require under one roof. Lightricks presents it as a breakthrough in video production: it offers text-to-video generation, camera controls, and editable scenes, streamlining the creative process by integrating the various stages of filmmaking into a single platform.

7. Morph Studio

Morph Studio is an early-stage startup. The company was founded in April of this year and completed a seed round worth several million dollars in May. Its video generator is built on diffusion models and is designed to keep generated videos visually consistent and faithful to their text prompts.

Morph Studio is targeting short-form video. The company aims to build a consumer-oriented community product and is currently validating its technology and user demand through Discord. Morph also plans to use online communities to explore the themes and community culture users prefer, analyzing different topics and content in depth, with the ultimate goal of building its own video community.

8. NeverEnds Video Generator

On December 1st, NeverEnds, a rising star in AI video, released a major 2.0 update that adds image-to-video generation and mobile support. NeverEnds now offers two main features: text-to-video generation and image-to-video generation.

According to its official description, NeverEnds differs from top-tier AI video tools such as Runway and Pika in three ways: 1. it creates AI videos with greater realism and practicality; 2. it strengthens the portrayal of characters in AI videos; and 3. it lowers the learning curve while supporting longer videos and a wider range of aspect ratios.

9. Stable Video Diffusion

In November, Stability AI debuted Stable Video Diffusion, a video generator built on its Stable Diffusion text-to-image model, sparking discussion across the AI community. The model generates a few seconds of video from a single still image, and it is one of the few video generators available in the open-source or commercial domains.

Stable Video Diffusion was released as two image-to-video models, which generate 14 and 25 frames respectively at customizable frame rates between 3 and 30 frames per second. It joins Stability AI’s diverse family of open-source models, which span modalities such as images, language, audio, 3D, and code.
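Because frame count and frame rate are set independently, the length of a clip is simply frames divided by fps. The short Python sketch below works through that arithmetic for the 14- and 25-frame outputs and the 3 to 30 fps range mentioned above; it contains no model code, and the helper name `clip_seconds` is purely illustrative.

```python
def clip_seconds(num_frames: int, fps: float) -> float:
    """Clip duration in seconds: frame count divided by frames per second."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


# The two released frame counts at the lowest and highest supported frame rates.
for frames in (14, 25):
    for fps in (3, 30):
        print(f"{frames} frames at {fps:>2} fps: {clip_seconds(frames, fps):.2f} s")
```

At 30 fps even the 25-frame model yields well under a second of footage (about 0.83 s), while at 3 fps the same frames span about 8.33 s, so choosing the frame rate trades smoothness for duration.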

10. AnimateDiff Video Generator

AnimateDiff is a framework for text-to-image diffusion models that turns personalized text-to-image models into animation generators, producing animated clips from text such as 2D anime-style videos. Its key feature is that it works with most existing personalized text-to-image models without model-specific tuning or additional training.

To preserve what the original text-to-image model has learned, AnimateDiff keeps that model frozen, inserts a motion modeling module into it, and trains only this module on video data so that it learns reasonable motion priors. Because these priors come from a large corpus of videos, AnimateDiff produces animations with better continuity than earlier methods.
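To make the idea of a motion module more concrete, here is a minimal pure-Python sketch of temporal self-attention, the kind of layer the motion modeling module AnimateDiff inserts into the frozen image model is built around. It is a toy under stated assumptions, not AnimateDiff’s code: features are stored as `frames[t][p]` (a small vector for frame `t` at spatial position `p`), learned query/key/value projections are omitted, and a scalar `out_scale` stands in for the module’s output projection. Each spatial position attends only across time, so information flows between frames while the image model’s spatial layers are left untouched.

```python
import math

Vector = list[float]
Frames = list[list[Vector]]  # frames[t][p]: feature vector of frame t at spatial position p


def temporal_self_attention(frames: Frames) -> Frames:
    """Scaled dot-product self-attention along the time axis only.

    Each spatial position p is treated as its own sequence with one token per
    frame. Learned query/key/value projections are omitted for brevity.
    """
    num_frames, num_pos, dim = len(frames), len(frames[0]), len(frames[0][0])
    out: Frames = [[[] for _ in range(num_pos)] for _ in range(num_frames)]
    for p in range(num_pos):
        seq = [frames[t][p] for t in range(num_frames)]
        for t in range(num_frames):
            # Similarity between frame t and every frame s at the same position.
            scores = [sum(a * b for a, b in zip(seq[t], seq[s])) / math.sqrt(dim)
                      for s in range(num_frames)]
            top = max(scores)  # subtract the max for a numerically stable softmax
            weights = [math.exp(x - top) for x in scores]
            total = sum(weights)
            # Softmax-weighted average of all frames' features at position p.
            out[t][p] = [sum(w * seq[s][d] for s, w in enumerate(weights)) / total
                         for d in range(dim)]
    return out


def motion_module(frames: Frames, out_scale: float) -> Frames:
    """Residual block x + out_scale * attention(x)."""
    attended = temporal_self_attention(frames)
    return [[[x + out_scale * a for x, a in zip(frames[t][p], attended[t][p])]
             for p in range(len(frames[t]))]
            for t in range(len(frames))]


# Three frames, two spatial positions, 2-dimensional features.
clip = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.9, 0.1], [0.1, 0.9]],
    [[0.8, 0.2], [0.2, 0.8]],
]
untrained = motion_module(clip, out_scale=0.0)  # identical to clip: image model unchanged
trained = motion_module(clip, out_scale=1.0)    # each frame now blends in features from the others
```

Setting `out_scale = 0` mimics a zero-initialized output projection: the residual block then starts as an identity mapping, so inserting it does not change the frozen image model’s output until training moves the weights away from zero.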

11. Midjourney Video Generator

Midjourney is one of the most commercially successful AI image generation services to date. Given the intense competition in video generation, it is highly likely that Midjourney will launch its own video generator as part of its V6 release.

12. Emu Video Generator

Meta recently unveiled two groundbreaking research projects, Emu Video and Emu Edit. Emu Video is a diffusion-based text-to-video method that generates high-quality videos in two steps: it first generates an image from the text prompt, then generates a video conditioned on both the text and that image. Its videos show a remarkable degree of stylization, and it can bring still images to life by adding motion.

13. Wonder Dynamics

Wonder Dynamics has launched a fully automated CG production tool called Wonder Studio. With just a single camera, this tool can automatically analyze and capture live performances and transform them into high-quality animations. It eliminates the need for complex 3D software and production processes, and it seamlessly integrates CG characters with real-world settings, achieving a perfect blend between the two.

14. Meta Make-A-Video

Make-A-Video, Meta’s text-to-video model, learns what the world looks like and how it is described from paired text-image data, and learns how the world moves from unlabeled video clips. Because the model does not have to learn visual and multimodal representations from scratch, training the text-to-video model is faster.

Make-A-Video extends a diffusion-based text-to-image model into a text-to-video model. Because it does not need paired text-video data, the videos it generates inherit the broad capabilities of the image model, including its aesthetic diversity and imaginative depictions.
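The Make-A-Video paper describes one way of reusing a pretrained image model’s layers: each spatial convolution is followed by a new 1D convolution across frames (a "pseudo-3D" layer), and that temporal convolution is initialized as the identity. The toy Python sketch below illustrates the factorization on 1D "frames" (lists of pixel values); the function names, kernels, and zero padding are illustrative assumptions, not Meta’s implementation.

```python
Frame = list[float]  # a toy 1-D "image": one value per pixel


def conv1d_same(signal: list[float], kernel: list[float]) -> list[float]:
    """1-D cross-correlation (what deep-learning 'convolution' layers compute),
    zero-padded so the output has the same length as the input."""
    half = len(kernel) // 2
    n = len(signal)
    return [sum(k * signal[i + j - half]
                for j, k in enumerate(kernel) if 0 <= i + j - half < n)
            for i in range(n)]


def pseudo_3d_conv(video: list[Frame], spatial_kernel: list[float],
                   temporal_kernel: list[float]) -> list[Frame]:
    """Spatial conv on every frame, followed by a temporal conv on every pixel across frames."""
    # 1) Spatial step: the pretrained image layer, applied to each frame independently.
    spatial = [conv1d_same(frame, spatial_kernel) for frame in video]
    # 2) Temporal step: convolve each pixel's values along the time axis.
    num_frames, width = len(spatial), len(spatial[0])
    columns = [conv1d_same([spatial[t][x] for t in range(num_frames)], temporal_kernel)
               for x in range(width)]
    # Transpose back to video[t][x] layout.
    return [[columns[x][t] for x in range(width)] for t in range(num_frames)]


IDENTITY_TEMPORAL = [0.0, 1.0, 0.0]  # identity initialization: frame t passes through unchanged
EDGE_FILTER = [-1.0, 0.0, 1.0]       # stand-in for a pretrained spatial filter

video = [[0.0, 1.0, 2.0, 3.0], [1.0, 1.0, 1.0, 1.0], [3.0, 2.0, 1.0, 0.0]]
out = pseudo_3d_conv(video, EDGE_FILTER, IDENTITY_TEMPORAL)
# out equals running the image filter on each frame separately:
# [conv1d_same(f, EDGE_FILTER) for f in video]
```

With the identity temporal kernel, the layer reproduces the image model frame by frame; training then reshapes the temporal kernel so that neighboring frames influence one another, which is where motion is learned.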

15. ByteDance MagicVideo

MagicVideo is an efficient text-to-video generation framework based on the latent diffusion model, proposed by ByteDance. It can generate smooth video clips that are consistent with the given textual descriptions. The core of MagicVideo lies in keyframe generation, where the diffusion model approximates the distribution of 16 keyframes in a low-dimensional latent space.

For video generation, it uses a 3D U-Net decoder equipped with an efficient video distribution adaptor and a directed temporal attention module. MagicVideo can produce high-quality video clips with realistic or fictional content, making it suitable for a variety of commercial scenarios, and it can be applied in popular platforms such as CapCut and TikTok.

16. NVIDIA Video LDM

Video LDM first pretrains a latent diffusion model (LDM) on images only. It then turns this image generator into a video generator by introducing a temporal dimension to the latent space and fine-tuning on encoded image sequences (i.e., videos). The diffusion model upsamplers are temporally aligned in the same way, turning them into temporally consistent video super-resolution models. This approach efficiently and effectively converts publicly available, state-of-the-art text-to-image LDMs into expressive and efficient text-to-video models, reaching resolutions of up to 1280×2048.
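The central step, keeping the pretrained spatial layers and inserting new temporal layers whose output is blended back in, can be sketched in a few lines of Python. The Video LDM paper combines the two outputs with a learnable mixing factor α, roughly z = α·z_spatial + (1 - α)·z_temporal; in this sketch α is a plain float, the spatial layer is a fixed per-frame function, and the temporal layer is a hypothetical three-frame moving average, all chosen only for illustration.

```python
from typing import Callable

Frame = list[float]


def temporal_moving_average(video: list[Frame]) -> list[Frame]:
    """Hypothetical temporal layer: average each pixel with the same pixel in neighbouring frames."""
    out = []
    for t in range(len(video)):
        window = video[max(0, t - 1): t + 2]  # previous, current and next frame (if present)
        out.append([sum(f[x] for f in window) / len(window) for x in range(len(video[t]))])
    return out


def video_block(video: list[Frame], spatial_layer: Callable[[Frame], Frame],
                alpha: float) -> list[Frame]:
    """Blend alpha * spatial output + (1 - alpha) * temporal output, frame by frame."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    z_spatial = [spatial_layer(frame) for frame in video]  # frozen image layer, per frame
    z_temporal = temporal_moving_average(z_spatial)         # new layer mixing across frames
    return [[alpha * s + (1.0 - alpha) * m for s, m in zip(fs, ft)]
            for fs, ft in zip(z_spatial, z_temporal)]


def double(frame: Frame) -> Frame:
    """Stand-in for a pretrained spatial layer."""
    return [2.0 * v for v in frame]


latents = [[0.0, 1.0], [1.0, 2.0], [2.0, 3.0]]
image_only = video_block(latents, double, alpha=1.0)  # exactly the image layer, frame by frame
blended = video_block(latents, double, alpha=0.5)     # half spatial, half temporally smoothed
```

With α = 1 the block reduces exactly to the frozen image layer applied frame by frame, which is why a blend like this lets an image model be extended to video without discarding what it already knows; fine-tuning on video then decides how much temporal mixing each layer uses.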

17. Microsoft NUWA-XL

NUWA is an AI drawing tool jointly developed by Microsoft Research Asia, Peking University, and Microsoft Azure AI. It supports a range of tasks, including text-to-drawing, text-to-image, outline-to-image, image augmentation, image-to-video, and video prediction, and it can generate high-resolution images of any size to suit different devices, platforms, and scenarios. A notable addition to the family is NUWA-XL, which uses a novel Diffusion over Diffusion architecture to generate high-quality, extra-long videos in parallel. From just 16 simple descriptions, it can generate animated videos up to 11 minutes long.
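The coarse-to-fine idea behind Diffusion over Diffusion can be illustrated without any model code. In the Python sketch below, a "global" step places keyframes across the whole timeline, and each later level runs "local" steps that fill in the frames between every pair of neighboring frames from the level above. It assumes that every call produces a fixed number of frames (`frames_per_call`, counting both endpoints, which a local call receives as conditions); the function name and that assumption are illustrative, and the sketch only schedules frame indices rather than generating anything.

```python
def diffusion_over_diffusion_plan(frames_per_call: int, levels: int):
    """Schedule which frame indices each level generates.

    Returns (plan, total_frames). plan[level] is a list of jobs (start, end, new_frames):
    level 0 holds a single global job that places the keyframes; each later job is a
    local job conditioned on frames `start` and `end` that fills in `new_frames`.
    """
    if frames_per_call < 3 or levels < 1:
        raise ValueError("need frames_per_call >= 3 and levels >= 1")
    gap = frames_per_call - 1
    total_frames = gap ** levels + 1
    step = gap ** (levels - 1)  # spacing between global keyframes
    keyframes = [i * step for i in range(frames_per_call)]
    plan = [[(0, total_frames - 1, keyframes)]]
    known = keyframes
    for _ in range(1, levels):
        step //= gap  # each level is `gap` times denser than the one above
        jobs = [(a, b, list(range(a + step, b, step))) for a, b in zip(known, known[1:])]
        plan.append(jobs)
        known = sorted(set(known).union(f for _, _, new in jobs for f in new))
    return plan, total_frames


plan, total = diffusion_over_diffusion_plan(frames_per_call=16, levels=3)
for level, jobs in enumerate(plan):
    print(f"level {level}: {len(jobs)} independent call(s), "
          f"{sum(len(new) for _, _, new in jobs)} new frames")
print("total frames:", total)
```

Because every job within a level depends only on frames produced by the level above, those jobs can run in parallel, which is what makes this kind of hierarchy attractive for very long videos. Under these assumptions, 16 frames per call and three levels cover 3,376 frames using 1 + 15 + 225 = 241 calls.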

18. CogVideo

CogVideo, from Tsinghua University, is the first open-source Chinese text-to-video generation model and is based on autoregressive modeling. At the time of its release, it was the largest and first open-source text-to-video model, and it was designed specifically to support Chinese prompts. With 9.4 billion parameters, it aligns text and video clips closely, which significantly improves video quality and accuracy. Compared with previous models, CogVideo can generate videos at a higher resolution (480×480).

19. Google Imagen Video

Imagen Video, developed by Google, is a text-conditioned video generation system based on a cascade of video diffusion models. Beyond generating high-fidelity videos, it offers a high degree of controllability and world knowledge: it can generate a variety of videos and text animations in different art styles, shows an understanding of 3D structure, and can render text in diverse styles and with varied motion.

20. Google Phenaki

Phenaki, developed by Google Research, is the first model capable of generating videos from open-ended, time-variable prompts: given a sequence of text prompts, it generates a variable-length video that follows them. Its videos are temporally coherent and diverse, and it can even handle new concepts that may not appear in the dataset. Phenaki also shows that jointly training on images and videos improves the quality and diversity of the generated content: by training on a large number of image-text pairs alongside a smaller set of video-text examples, it generalizes beyond the available video datasets. However, the generated videos have a relatively low resolution.

21. Zeroscope

Zeroscope is a text-to-video generation model from the ModelScope community. The Zeroscope_v2 large model has been open-sourced on Hugging Face and is derived from the ModelScope text-to-video-synthesis model, which has 1.7 billion parameters. Being open source lets it benefit from collective intelligence, speeding up development and iteration while encouraging community participation, whereas many other text-to-video generation models are not yet available to end users. However, because it is an open-source project without corporate or team backing and has no clear path to commercialization, Zeroscope’s future development is uncertain.

22. Rephrase.ai

On November 23rd, Adobe confirmed its acquisition of AI startup Rephrase.ai, known for its AI technology that turns text into videos featuring virtual avatars. The acquisition reflects a growing trend of AI-generated content expanding beyond text and images into more complex forms such as video, and recent advances in related products and technologies point to rising interest and competition in this field.

Update: Adobe Premiere Pro Unveils Generative AI!

Conclusion

In addition to these, a large number of video generation algorithms and models were released in quick succession toward the end of 2023, including Fei-Fei Li’s video generation model W.A.L.T, Tencent’s AnimateZero, Meitu’s MiracleVision 4.0, and Microsoft’s GAIA. Video generation is clearly set to be one of the hottest tracks in AI in 2024.

Continuous Updates:

Since the tools above were introduced, the latest AI video platforms have pushed the possibilities for content creators further, offering unparalleled precision in character animation and a wider range of customization options, all through intuitive interfaces:

23. OpenAI Sora

OpenAI’s Sora stands out as a cutting-edge AI video generator. It turns text descriptions into coherent, fluid videos up to 60 seconds long, with intricate scenes, vivid character expressions, and complex camera movements. This ability to produce longer, high-fidelity content is a significant advance over other generators that are limited to a few seconds of video.

Moreover, Sora has made substantial strides in video realism, length, stability, consistency, resolution, and text comprehension, setting a new benchmark for AI-generated video.

24. PixVerse

PixVerse is a free AI video generator that supports 4K resolution and is positioned as an alternative to Pika. It can generate high-quality videos from text or images, including live-action-style videos, animations, and 3D cartoon videos. Users can choose the style and video size, and the resulting videos are watermark-free. Besides working from text, it can animate uploaded images of landscapes, animals, and more.

25. Heygen

Heygen, an abbreviation for “Hey, generative AI,” is a pioneering platform that leverages the power of artificial intelligence to generate realistic avatars and videos. This innovative service is designed to simplify the process of creating high-quality, personalized content for various digital applications. With a focus on user-friendly design, Heygen empowers users to bring their creative ideas to life, regardless of technical expertise.

26. Haiper

Haiper AI is a cutting-edge video creation platform that leverages artificial intelligence to transform text and still images into captivating HD videos. It simplifies the high-quality video production process, empowering creators to effortlessly tell engaging stories.